Table of Contents

Motivation

Algorithm

Sampling

Augmented Reality Setup

Results

Future Work

Contributions

References

Source Code

Motivation

This project draws inspiration from the Instant Radiosity paper presented by Keller at SIGGRAPH '97 [1]. There have been numerous enhancements to the basic algorithm, such as bidirectional instant radiosity [5] and interactive global illumination in highly occluded environments [3]. Since both Chris and I do research in augmented reality (AR), we thought it would be interesting to apply this technique to an AR environment.

There are a number of freely available implementations of Instant Radiosity on the internet. These gave us an idea of the frame rates we could expect on modern graphics cards (there was no shader support when the original paper was published). Our aim was to integrate this algorithm with an existing Game Engine for Augmented Reality (GEAR), developed at the Augmented Environments Lab, and to assess its visual impact on the AR experience. We use the OGRE graphics engine to render the polygons and point lights and to cast shadows.

Algorithm

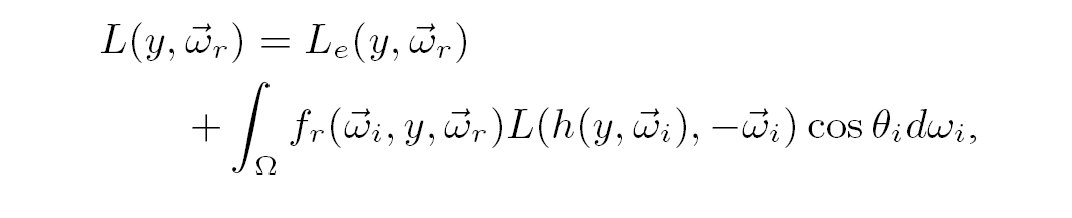

Radiosity is a global illumination algorithm that handles diffuse interreflections between surfaces.

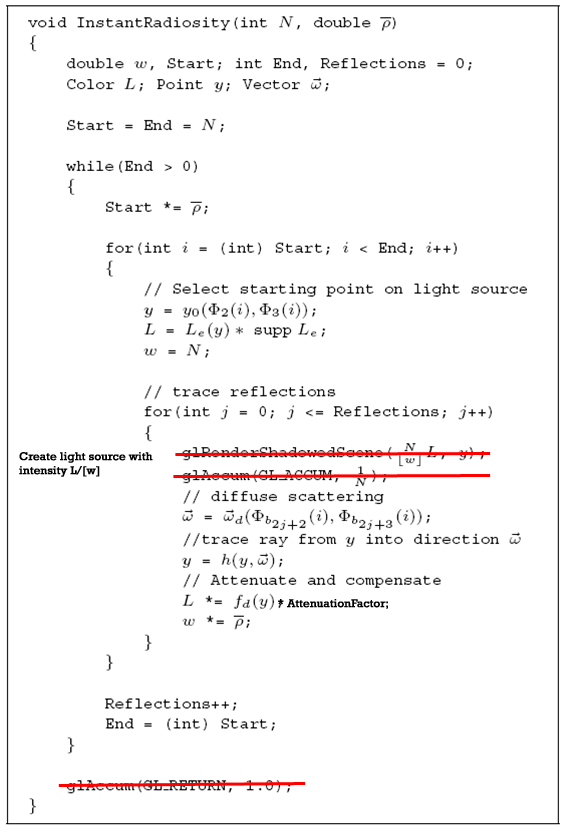

Instant Radiosity uses a quasi-Monte Carlo approach to approximate this integral: it casts rays from the light source and creates a point light source at each bounce. If the light sources and the resulting bounces are sampled adequately, this yields a good approximation of the global illumination in the scene. The core algorithm (with our modifications indicated) is as follows:

The reasons for these modifications are as follows. We use OGRE 1.2 as our rendering engine, and it does not allow direct access to the accumulation buffer. To compensate, we attenuate each light source and render the scene once per light, instead of rendering full-intensity light sources and averaging the results (as in the paper). We also found that we needed an additional attenuation factor that lowers the intensity of secondary light sources to make the scene look more realistic.
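The following Python sketch shows one way the point lights could be generated. It follows the loop structure of Keller's original pseudocode and folds in the two modifications described above: the 1/N averaging weight is baked into each light's intensity so the lights can simply be rendered one after another, and a `secondary_atten` factor damps the lights created after the first bounce. `secondary_atten` is a hypothetical parameter (the value used in the project is not given here), and the scene is hidden behind hypothetical callbacks (`sample_light`, `sample_dir`, `trace`, `albedo`). The Halton indexing (bases 2 and 3 on the light, primes with indices 2j+2 and 2j+3 for reflection j) is our reading of the paper. This is a sketch, not the GEAR/OGRE code.

```python
import math
from typing import Callable, List, Optional, Tuple

Vec3 = Tuple[float, float, float]


def first_primes(count: int) -> List[int]:
    """Return the first `count` primes by trial division."""
    primes: List[int] = []
    candidate = 2
    while len(primes) < count:
        if all(candidate % p for p in primes if p * p <= candidate):
            primes.append(candidate)
        candidate += 1
    return primes


def radical_inverse(i: int, base: int) -> float:
    """Mirror the base-`base` digits of i about the radix point."""
    result, scale = 0.0, 1.0 / base
    while i > 0:
        result += scale * (i % base)
        i //= base
        scale /= base
    return result


PRIMES = first_primes(50)  # enough for 24 reflection levels (index 2j+3 <= 49)


def generate_point_lights(
    n: int,
    rho: float,
    power: float,
    sample_light: Callable[[float, float], Vec3],
    sample_dir: Callable[[Vec3, float, float], Vec3],
    trace: Callable[[Vec3, Vec3], Optional[Vec3]],
    albedo: Callable[[Vec3], float],
    secondary_atten: float = 1.0,  # hypothetical extra damping of bounce lights
) -> List[Tuple[Vec3, float, int]]:
    """Return (position, intensity, bounce index) for every point light."""
    lights: List[Tuple[Vec3, float, int]] = []
    start, end, reflections = float(n), n, 0
    while end > 0:
        start *= rho
        # Paths i in [start, end) are traced for `reflections` bounces.
        for i in range(int(start), end):
            y = sample_light(radical_inverse(i, 2), radical_inverse(i, 3))
            energy, w = power, float(n)
            for j in range(reflections + 1):
                # Keller renders N/floor(w)*L and accumulates with weight 1/N;
                # folding the 1/N into the intensity allows additive rendering.
                intensity = energy / math.floor(w)
                if j > 0:
                    intensity *= secondary_atten
                lights.append((y, intensity, j))
                if j == reflections:
                    break  # this path needs no further bounce
                u = radical_inverse(i, PRIMES[2 * j + 2])
                v = radical_inverse(i, PRIMES[2 * j + 3])
                hit = trace(y, sample_dir(y, u, v))
                if hit is None:
                    break  # ray escaped (open scene); weights stay normalized by N
                y = hit
                energy *= albedo(y)  # diffuse reflectance at the new hit point
                w *= rho
        reflections += 1
        end = int(start)
    return lights
```

Each returned tuple would become one point light in the renderer; keeping the bounce index makes it easy to render the primary and secondary passes separately.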

Sampling

Sampling is used to approximate the integral of a function by the average of the function evaluated at a set of points. Mathematically:

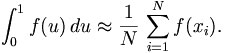

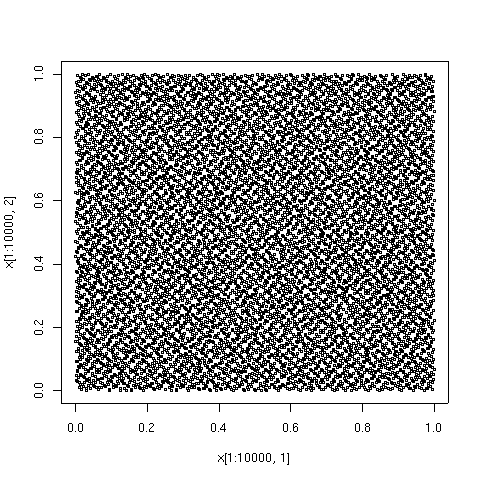

If X_i = i/N, the sampling is rectangular. If X_i is random or pseudo-random, we call it Monte Carlo sampling. If the sequence X_i has low discrepancy, we call it quasi-Monte Carlo sampling. Loosely speaking, low discrepancy means that a plot of the sequence has no regions of noticeably higher or lower sample density than others. For instance, consider the images below: the image on the left has low discrepancy.
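To make the three schemes concrete, this small Python sketch estimates the integral of x^2 over [0,1] (exactly 1/3) with rectangular points X_i = i/N, pseudo-random points (Python's `random` module with a fixed seed) and a low-discrepancy van der Corput sequence, which is the one-dimensional Halton sequence in base 2. The function names are our own, chosen for illustration.

```python
import random
from typing import Callable, List, Sequence


def radical_inverse(i: int, base: int) -> float:
    """Mirror the base-`base` digits of i about the radix point."""
    result, scale = 0.0, 1.0 / base
    while i > 0:
        result += scale * (i % base)
        i //= base
        scale /= base
    return result


def estimate(f: Callable[[float], float], xs: Sequence[float]) -> float:
    """Approximate the integral of f over [0, 1] by the mean of f at xs."""
    return sum(f(x) for x in xs) / len(xs)


def rectangular(n: int) -> List[float]:
    return [i / n for i in range(n)]  # X_i = i / N


def monte_carlo(n: int, seed: int = 1) -> List[float]:
    rng = random.Random(seed)  # fixed seed so runs are repeatable
    return [rng.random() for _ in range(n)]


def quasi_monte_carlo(n: int) -> List[float]:
    return [radical_inverse(i, 2) for i in range(1, n + 1)]  # van der Corput


if __name__ == "__main__":
    f = lambda x: x * x  # exact integral over [0, 1] is 1/3
    for name, xs in [("rectangular", rectangular(1000)),
                     ("Monte Carlo", monte_carlo(1000)),
                     ("quasi-Monte Carlo", quasi_monte_carlo(1000))]:
        print(f"{name:>18}: error = {abs(estimate(f, xs) - 1 / 3):.2e}")
```

In one dimension a regular grid is already hard to beat; the appeal of low-discrepancy points is that, unlike a grid, they remain well spread for any N and extend naturally to 2D by pairing sequences.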

Halton Sampling

Halton sampling is a deterministic quasi-Monte Carlo sampling technique. In 2D, it uses pairs of numbers generated from two Halton sequences. Each sequence is based on a prime p: its i-th element is the radical inverse of i in base p, obtained by writing i in base p and mirroring its digits about the radix point. The interval [0,1] is thus subdivided by progressively finer fractions with denominators p, p^2, p^3 and so on, until N unique fractions have been created; for p = 2 the sequence is 1/2, 1/4, 3/4, 1/8, 5/8, 3/8, 7/8 and so on. To generate 2D samples, pick two distinct primes p and q, generate the corresponding sequences and pair up their elements. It is recommended to discard the first 20 samples when using larger primes, because the initial pairs are highly correlated. Our implementation is based on the pseudocode provided in [1]. We precompute these sequences for the first 50 primes, which is computationally efficient because we do not need to regenerate them for each bounce.
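A minimal Python sketch of this construction: `radical_inverse` produces the i-th element of the sequence for a prime base, and `build_table` precomputes the sequences for the first 50 primes. The table size and the choice to skip the first 20 indices for every base (rather than only for larger primes) are our assumptions; this is not the project's implementation, which follows the pseudocode in [1].

```python
from typing import Dict, List, Tuple


def first_primes(count: int) -> List[int]:
    """Return the first `count` primes by trial division."""
    primes: List[int] = []
    candidate = 2
    while len(primes) < count:
        if all(candidate % p for p in primes if p * p <= candidate):
            primes.append(candidate)
        candidate += 1
    return primes


def radical_inverse(i: int, base: int) -> float:
    """Mirror the base-`base` digits of i about the radix point."""
    result, scale = 0.0, 1.0 / base
    while i > 0:
        result += scale * (i % base)
        i //= base
        scale /= base
    return result


def halton_sequence(base: int, n: int, skip: int = 0) -> List[float]:
    """n elements of the base-`base` Halton sequence, starting at index 1 + skip."""
    return [radical_inverse(i, base) for i in range(1 + skip, 1 + skip + n)]


def build_table(num_primes: int = 50, n: int = 256, skip: int = 20) -> Dict[int, List[float]]:
    """Precompute the sequences once so each bounce only looks values up."""
    # Assumption: the same skip is applied to every base, so index k refers
    # to the same sample number in all sequences.
    return {p: halton_sequence(p, n, skip) for p in first_primes(num_primes)}


def halton_2d(table: Dict[int, List[float]], p: int, q: int) -> List[Tuple[float, float]]:
    """Pair the sequences for two distinct primes into 2D sample points."""
    if p == q:
        raise ValueError("use two distinct primes")
    return list(zip(table[p], table[q]))
```

For example, `halton_2d(build_table(), 2, 3)` returns 256 points in the unit square that can then be mapped onto a light source or a hemisphere.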

Poisson Disk Sampling

This is a form of Poisson sampling in which samples are guaranteed to be separated by at least a specified distance (the radius). There are numerous techniques for generating Poisson disk samples efficiently, such as [8] and [9]. However, since we need only a small number of samples, we used the dart-throwing technique and cache the results. Dart throwing generates random candidate points and discards any that do not meet the radius criterion, continuing until N points have been accepted. In our implementation, we create a different set of samples for each bounce, so that the same set is always used for a given bounce. We believe this is similar to the approach taken in the original paper, where each reflection uses samples based on a set of primes (2j+2, 2j+3), with j being the reflection count.
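The dart-throwing step can be sketched in Python as follows: candidates are drawn uniformly in the unit square and rejected if they fall within `radius` of an already accepted point. The per-bounce cache, the use of the bounce index as the random seed and the `max_attempts` guard are hypothetical choices for this illustration; the guard is there because dart throwing can stall when the radius is too large for the requested N.

```python
import math
import random
from typing import Dict, List, Tuple

Point2 = Tuple[float, float]


def dart_throwing(n: int, radius: float, seed: int = 0,
                  max_attempts: int = 100_000) -> List[Point2]:
    """Accept uniform random points that are at least `radius` apart."""
    rng = random.Random(seed)
    accepted: List[Point2] = []
    attempts = 0
    while len(accepted) < n:
        if attempts >= max_attempts:
            raise RuntimeError("radius too large for the requested sample count")
        attempts += 1
        candidate = (rng.random(), rng.random())
        # Reject the dart if it lands inside the disk of an accepted sample.
        if all(math.dist(candidate, p) >= radius for p in accepted):
            accepted.append(candidate)
    return accepted


def build_bounce_sets(n: int, radius: float, bounces: int) -> Dict[int, List[Point2]]:
    """Cache one sample set per bounce; the seed is simply the bounce index."""
    return {j: dart_throwing(n, radius, seed=j) for j in range(bounces)}
```

Because each set depends only on its seed, the same bounce always sees the same samples from one frame to the next, which avoids flicker when the scene is re-lit.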

Mapping

Choosing the right sampling strategy is key to getting good results with IR. Sampling is used in two places in this project: to pick points on the light source, and to choose the direction in which to shoot rays from the selected point. Each of these requires a mapping from samples on the unit square to samples on triangles or on hemispheres. Fortunately, these mappings are described in Graphics Gems III (the relevant page is available on Google Books). The samples need to be weighted according to the area of each triangle. There are also other technical considerations that affect scene-independent implementations. For instance, increasing the number of samples per light source will result in a brighter scene unless the intensity of each sample is scaled accordingly. Similarly, in open environments, the intensity of each light needs to be attenuated by the total number of lights created (and not by the number of estimated hits).
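The Python sketch below shows two standard warps of unit-square samples: a square-root warp that maps (u, v) uniformly onto a triangle, and a cosine-weighted warp onto the hemisphere around the z-axis, which suits diffuse surfaces. We have not checked that these are the exact formulas given in Graphics Gems III. `pick_by_area` illustrates the area weighting for a light made of several triangles: it chooses a triangle with probability proportional to its area and rescales u so the same sample can be reused inside it. All names are hypothetical.

```python
import math
from typing import Sequence, Tuple

Vec3 = Tuple[float, float, float]


def square_to_triangle(u: float, v: float, a: Vec3, b: Vec3, c: Vec3) -> Vec3:
    """Map (u, v) in [0,1]^2 uniformly onto triangle abc."""
    s = math.sqrt(u)
    wa, wb, wc = 1.0 - s, s * (1.0 - v), s * v  # barycentric weights, sum to 1
    return tuple(wa * a[k] + wb * b[k] + wc * c[k] for k in range(3))


def square_to_cosine_hemisphere(u: float, v: float) -> Vec3:
    """Unit direction with density proportional to cos(theta); z is the normal."""
    r = math.sqrt(u)
    phi = 2.0 * math.pi * v
    # Local frame only: rotate into the surface's normal frame before tracing.
    return (r * math.cos(phi), r * math.sin(phi), math.sqrt(max(0.0, 1.0 - u)))


def triangle_area(a: Vec3, b: Vec3, c: Vec3) -> float:
    ab = [b[k] - a[k] for k in range(3)]
    ac = [c[k] - a[k] for k in range(3)]
    cross = (ab[1] * ac[2] - ab[2] * ac[1],
             ab[2] * ac[0] - ab[0] * ac[2],
             ab[0] * ac[1] - ab[1] * ac[0])
    return 0.5 * math.sqrt(sum(x * x for x in cross))


def pick_by_area(u: float, areas: Sequence[float]) -> Tuple[int, float]:
    """Choose index i with probability areas[i] / sum(areas); rescale u to [0,1]."""
    total = sum(areas)
    target = u * total
    acc = 0.0
    for idx, area in enumerate(areas):
        if target < acc + area or idx == len(areas) - 1:
            rescaled = (target - acc) / area if area > 0 else 0.0
            return idx, min(max(rescaled, 0.0), 1.0)
        acc += area
    raise ValueError("areas must be non-empty")
```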

Augmented Reality Setup

Our test system was the impressive Dell XPS H2C, with an Intel Core 2 Extreme (quad-core) processor, 4 GB of memory and two NVIDIA 8800 GTX cards in SLI. This system allowed us to achieve interactive frame rates with over 700 point light sources, 4x anti-aliasing and additive stencil shadowing.

We use a head-mounted display (HMD) fitted with a video camera that captures the environment the user is looking at. The video feed is sent to the computer, which overlays our virtual scene, and the result is then displayed on the HMD. We also give the user a wand that controls the position of the light source. One can think of this as holding a lamp: the wand's orientation does not affect the light, but the light itself can be moved. We use the InterSense ultrasonic tracker to retrieve position data for both the wand and the HMD.

Results

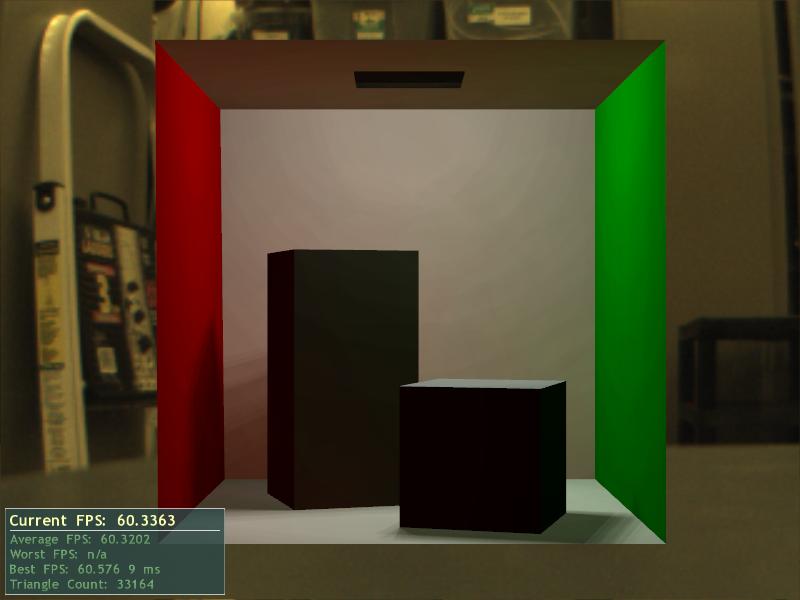

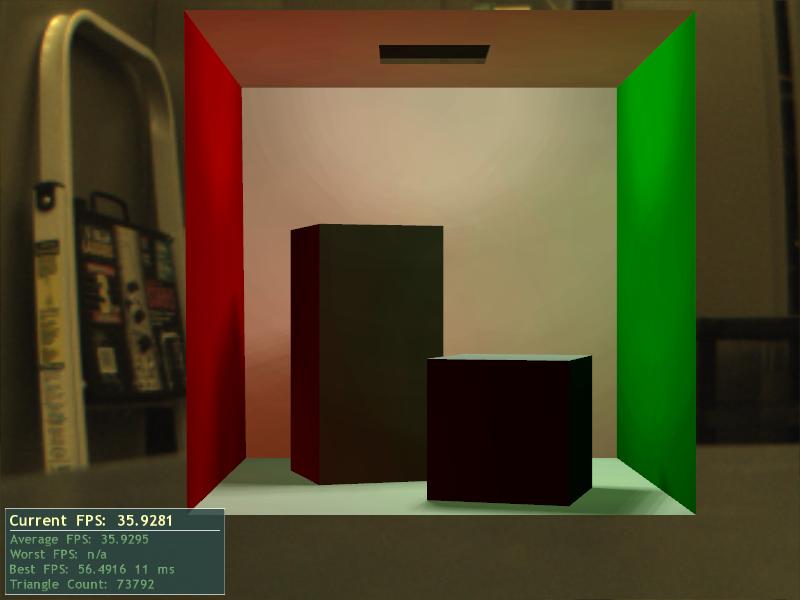

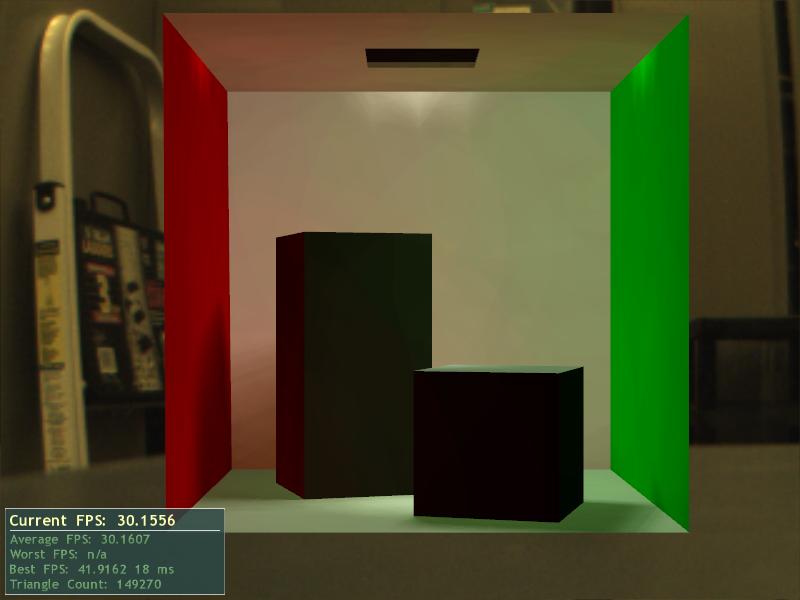

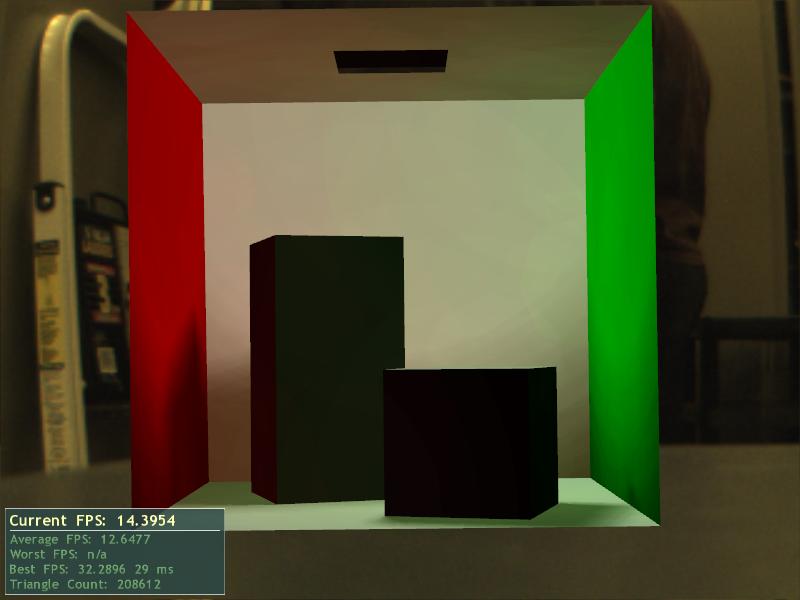

We analyzed the effect of the various parameters on the quality of the rendered image. We obtained interactive frame rates for N between 16 and 128; however, increasing N further (to more than 1000 point light sources) brought the system to a halt.

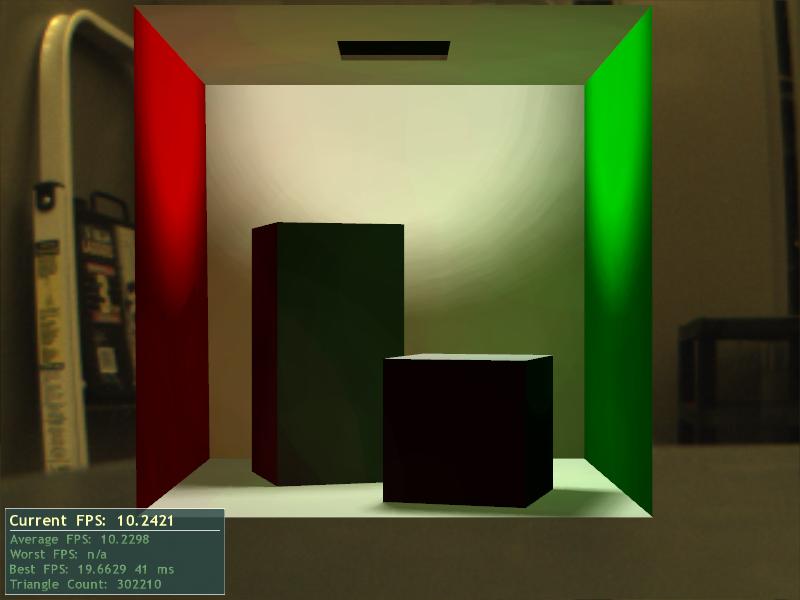

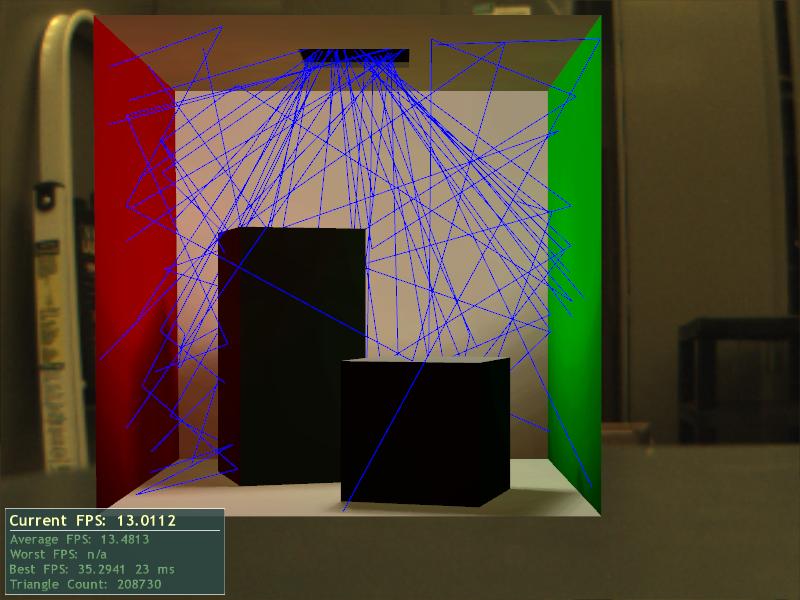

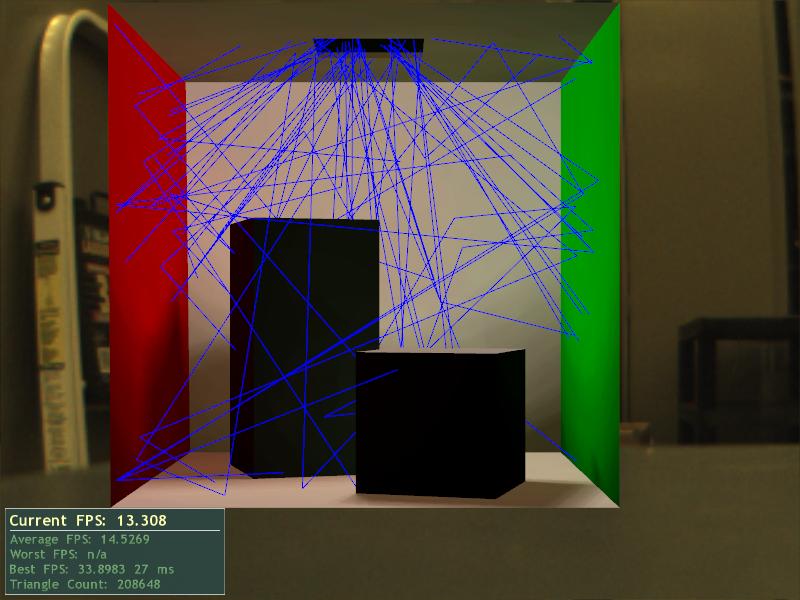

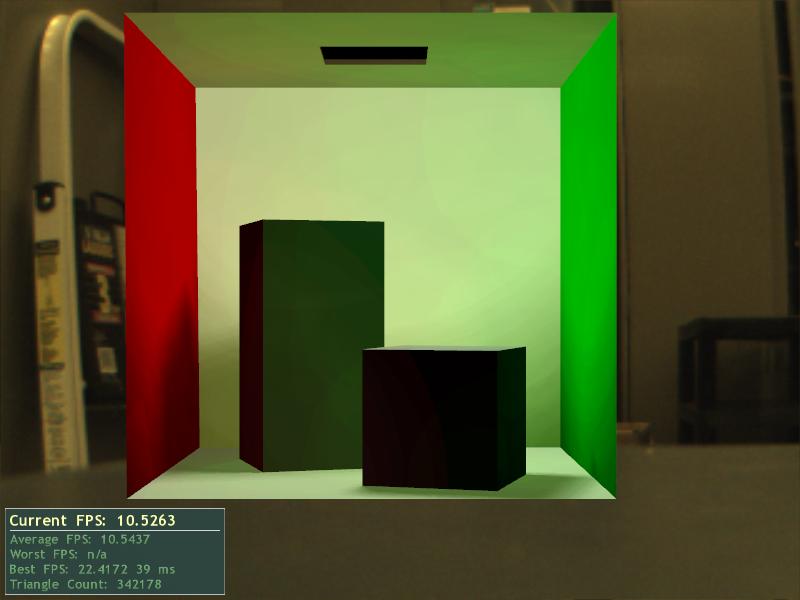

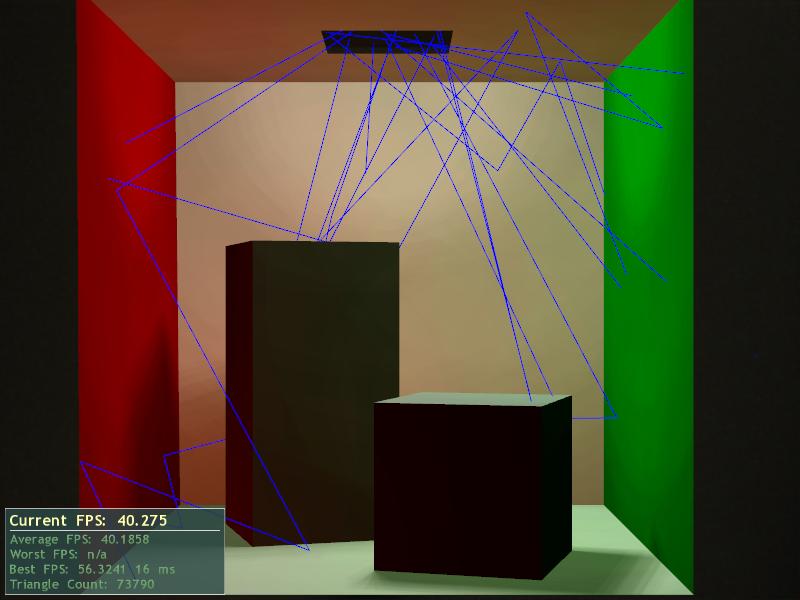

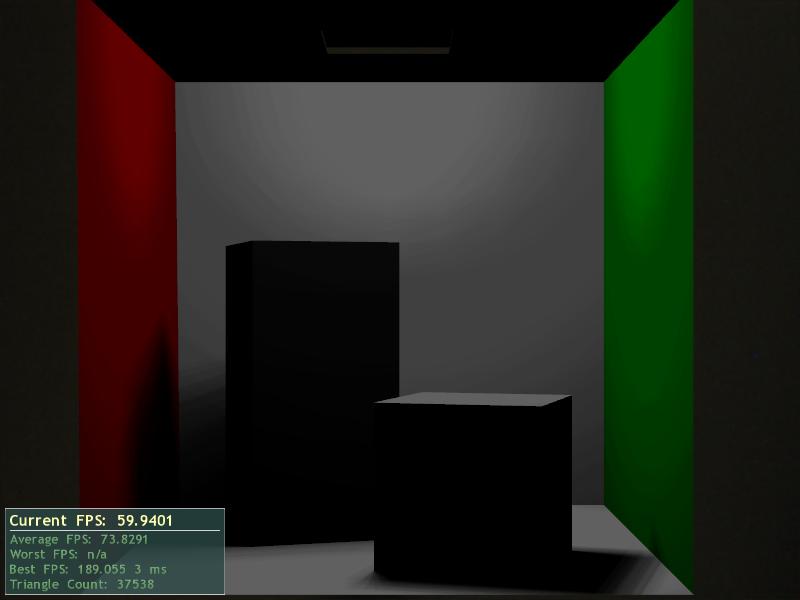

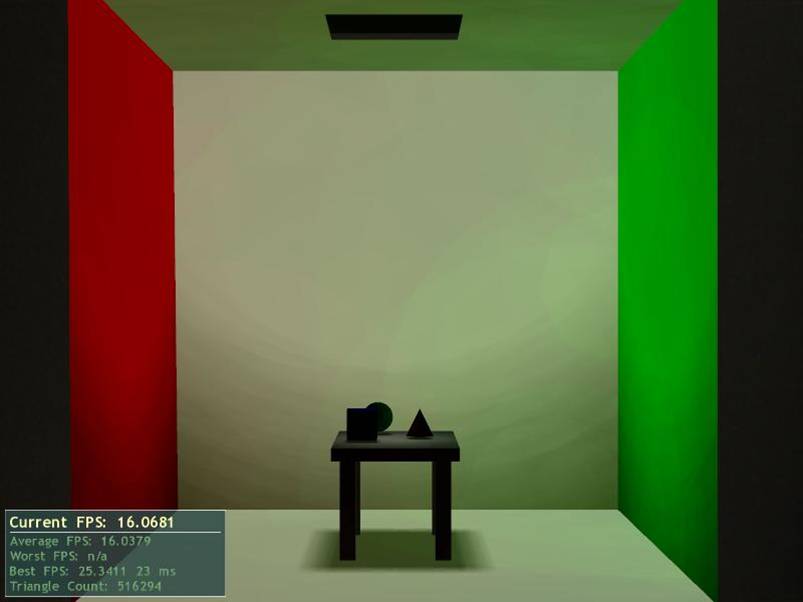

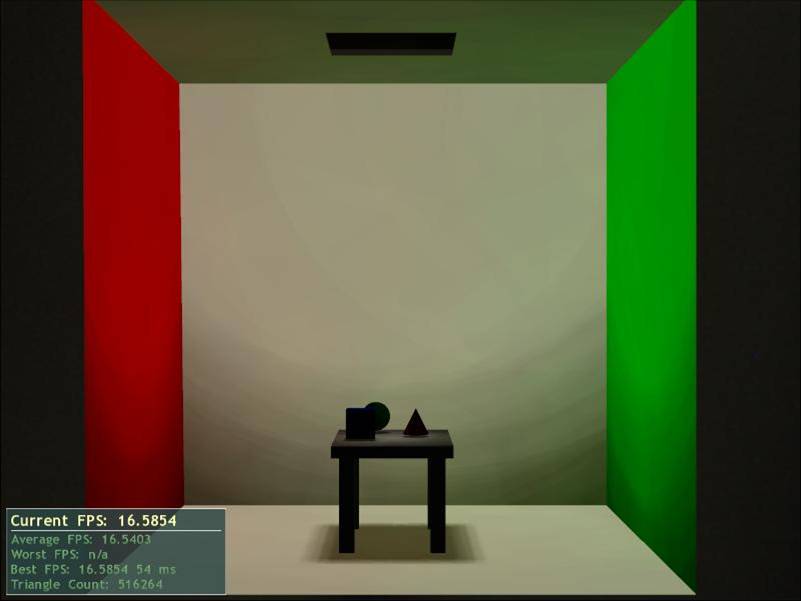

We use the Cornell Box as our scene and test the effect of changing N for Halton sampling. From left to right: N = 16, 32, 64, 90, 128. We noticed excessive illumination on the walls at N = 128, which we attribute to OGRE's point lights. At N = 64, there are some bright spots caused by point light sources lying too close to two surfaces. These disappear at N = 75 (not shown), indicating that the artifacts were caused by the particular light positions produced by the sampling.

In the next set of images, we observe the variations in the scene caused by Poisson Disk Sampling. This sampling technique did not yield the results we had hoped for. It required a lot of tweaking, such as setting a fixed seed and carefully adjusting N, Rho and the attenuation factor, to obtain good-looking results. We believe this is due to the choice of seed for random number generation; the radius might also need to be increased to get better results. These images were produced with N = 90 and Rho = 0.5774. The first image is a reference rendered with Halton sampling for the same N and Rho. Notice that some scenes show excessive red or green color bleeding due to more samples in that direction.

As a consequence, we focussed our efforts on generating results using Halton sampling. The following images were generated with Rho = 0.75 (more bounces). The first image has N = 90, Rho = 0.5774 (the suggested value in the paper). The last image has N=90, Rho = 0.25 (very few bounces). Notice that the scene gets brighter as the number of bounces increases with greater N. This leads us to believe that Rho plays a greater role than N in terms of overall lighting.
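A rough count of point lights may help explain this observation. Assuming the particle schedule of the original paper, in which roughly Nρ^j of the N paths leaving the light survive to the j-th reflection (ρ is written Rho above), the total number of lights and the deepest reflection level are approximately:

```latex
N_{\text{lights}} \approx \sum_{j=0}^{J} N\rho^{j} < \frac{N}{1-\rho},
\qquad
J \approx \left\lfloor \frac{\ln N}{\ln(1/\rho)} \right\rfloor
```

For N = 90 this gives about 120 lights and J ≈ 3 for ρ = 0.25, about 213 lights and J ≈ 8 for ρ = 0.5774, and about 360 lights and J ≈ 15 for ρ = 0.75. N scales the light count linearly, while ρ decides how many generations of bounce lights contribute. These are back-of-the-envelope estimates, not measurements from our system.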

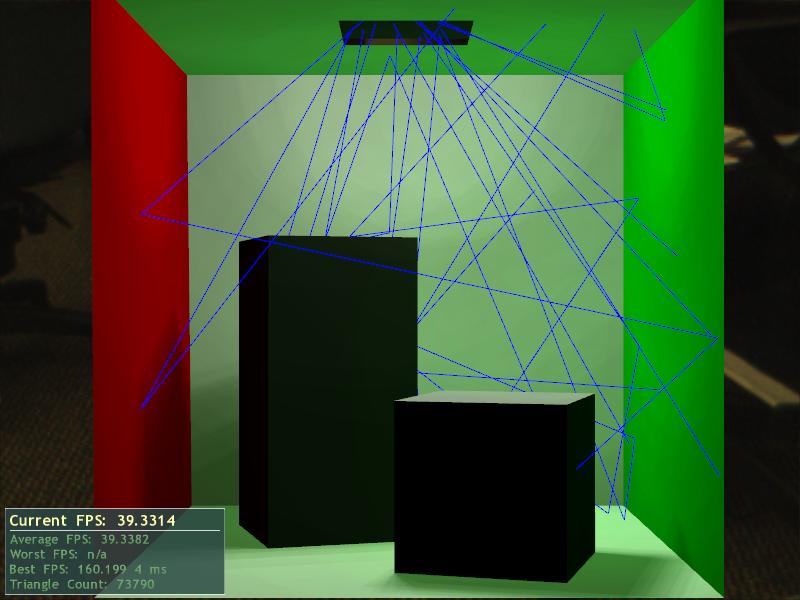

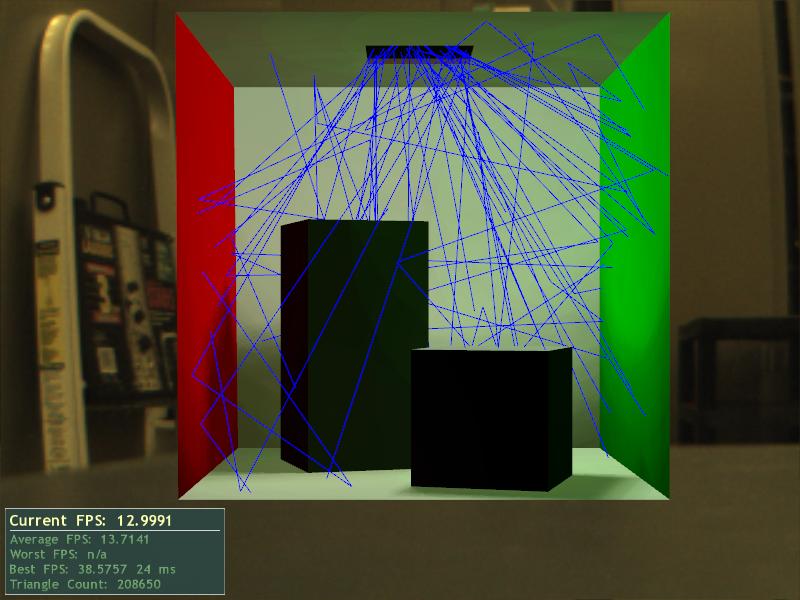

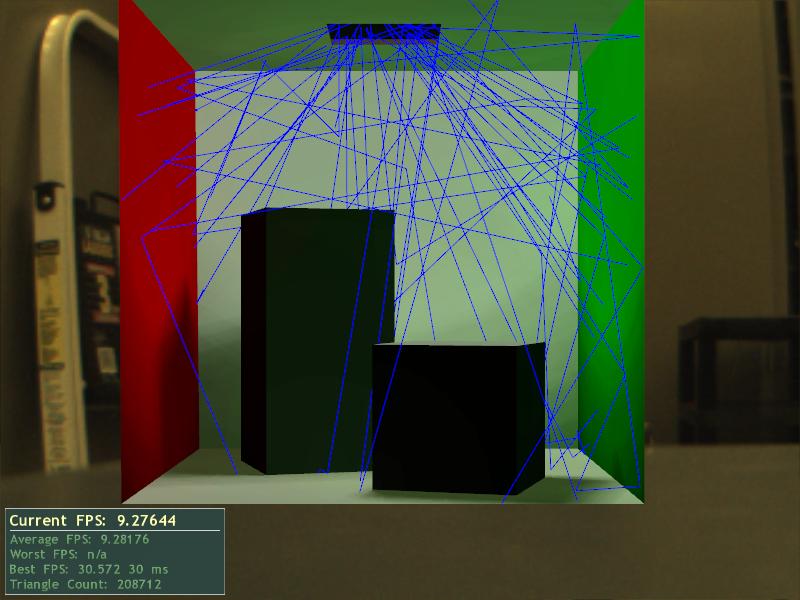

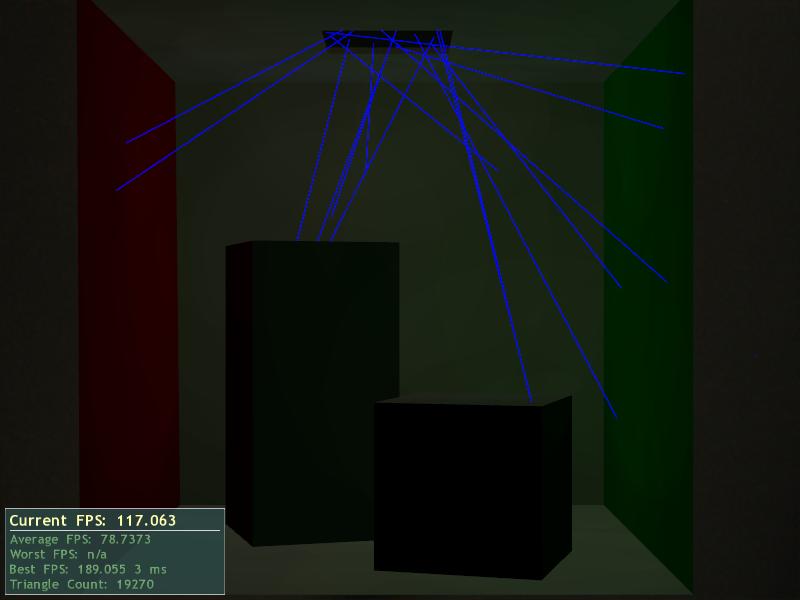

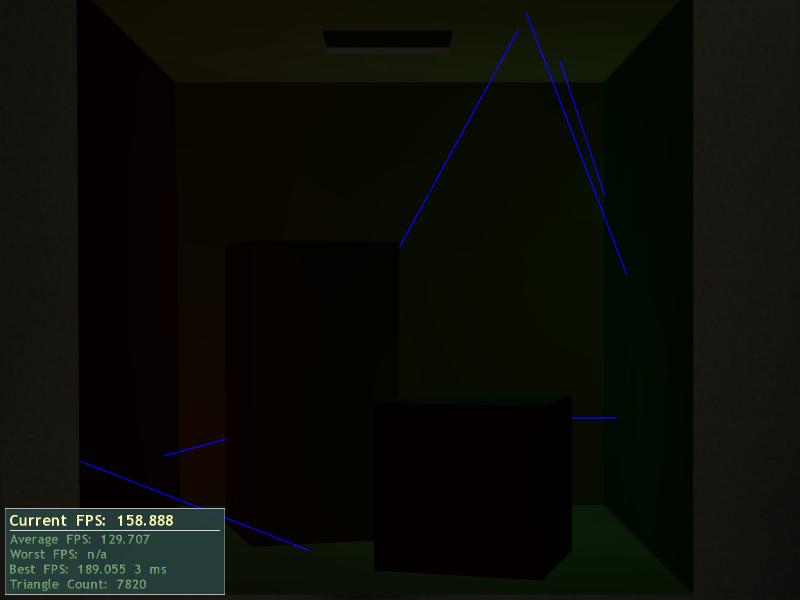

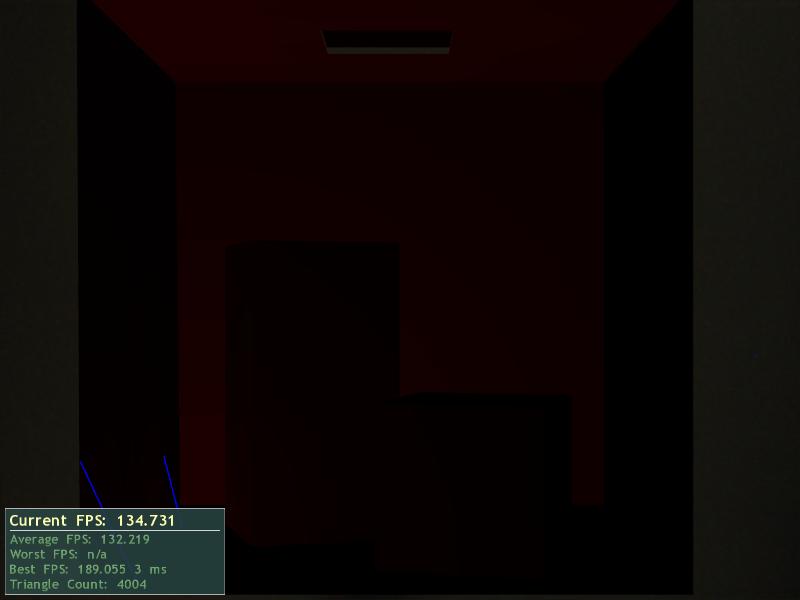

These images show the contribution of each light pass for Halton sampling with N = 32 and Rho = 0.5774. The first image shows the fully lit scene, and the second shows only the primary lights. The remaining images show the lights created after each reflection.

Finally, here are some images of a different scene rendered using Halton and Poisson Disk Sampling:

By tracking the wand, we obtained good results with a moving light source (with N and Rho fixed in advance). Observing the scene through the HMD helped us identify banding artifacts that would have been harder to spot on a normal display. We fixed these by tessellating the shapes. AR Instant Radiosity is an exciting experience that video does not do justice to. Please feel free to email us if you would like to try it out.

Note: The videos shown during the presentation have not been uploaded due to their file size. If I find a way of re-encoding them, I will post them.

Future Work

Our current implementation runs very well on our test system and produces results comparable to those in the original paper. There are many avenues for future work. One thing that struck us was that not all point light sources need to be recomputed every frame, even when the primary light source moves. Indeed, this is the focus of a paper [7] to be published at this year's Eurographics Symposium on Rendering. We would like to implement this approach to increase the frame rate and lower the processing load.

As mentioned earlier, we did not have much control over the rendering passes in OGRE 1.2. However, the latest release of OGRE, 1.4 (Eihort), supports texture shadows with access to the shaders. We would like to compare the two approaches using the new release. This will require porting GEAR to OGRE 1.4, which is a significant undertaking.

Yet another area worth investigating is bidirectional IR [5]. We believe that it would not require extensive modifications to the existing codebase and could improve the visual quality of scenes in which the light source is occluded by many objects.

Contributions

Much of the work in the project was done during joint programming sessions where we would work on related components, then merge and test the code immediately. This helped us create a PC version quickly. Both of us worked closely on the algorithm implementation and spent days tweaking parameters to obtain realistic radiosity.

In terms of portions of the project that we approached independently, Chris wrote most of the code to integrate the augmented reality components with the IR implementation. He also coded the scene and material loaders, and the OGRE light setup. My work mainly focussed on implementing sampling techniques, and creating a reference OpenGL version to verify the sample generation and our modified algorithm against the version described in the paper.

We would like to extend our gratitude to Tobias Lang who suggested that we tessellate our models to obtain better lighting results.

References

1) A. Keller. Instant Radiosity. Computer Graphics (SIGGRAPH '97 Proceedings), pages 49-55, 1997.

2) C. Benthin, I. Wald, and P. Slusallek. A Scalable Approach to Interactive Global Illumination. Computer Graphics Forum 22(3) (Proceedings of Eurographics), pages 621-630, 2003.

3) I. Wald, C. Benthin, and P. Slusallek. Interactive Global Illumination in Complex and Highly Occluded Environments. Eurographics Symposium on Rendering (14th Eurographics Workshop on Rendering), pages 74-81, June 2003.

4) M. Pharr. Instant Global Illumination.

5) B. Segovia, J. C. Iehl, R. Mitanchey, and B. Peroche. Bidirectional Instant Radiosity. Eurographics Symposium on Rendering, 2006.

6) T. Kollig and A. Keller. Efficient Bidirectional Path Tracing by Randomized Quasi-Monte Carlo Integration.

7) S. Laine, H. Saransaari, et al. Incremental Instant Radiosity for Real-Time Indirect Illumination. Eurographics Symposium on Rendering, 2007.

8) D. Dunbar and G. Humphreys. A Spatial Data Structure for Fast Poisson-Disk Sample Generation. SIGGRAPH 2007 Proceedings.

9) T. R. Jones. Efficient Generation of Poisson-Disk Sampling Patterns.

Source Code

The source code for the AR implementation can be downloaded from here. The source for the simple, fixed-view (and slow) OpenGL version can be obtained here.

Please note that the OpenGL version was created to verify some of our results and is unoptimized; I plan to work on that over the vacation. It reads data from simple data files that describe the quadrilaterals of the scene and the light (format: number of quads, color of quads and vertices of quads). Counter-clockwise triangles are created from vertices 0, 1, 2 and 0, 2, 3 of each quad. You might need to change the path in the source file to point to the location where you copied the data files.
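A minimal Python sketch of a reader for this kind of file. The exact layout is our guess from the description above: we assume the file starts with the quad count, and that each quad is given as an RGB color followed by its four vertices, all whitespace-separated. `quad_to_triangles` applies the (0, 1, 2), (0, 2, 3) split; both function names are hypothetical.

```python
from typing import List, Tuple

Vec3 = Tuple[float, float, float]
Quad = Tuple[Vec3, List[Vec3]]  # (RGB color, four vertices)


def parse_quads(text: str) -> List[Quad]:
    """Parse 'count' then, per quad, 'r g b' and four 'x y z' vertices (assumed layout)."""
    tokens = text.split()
    if not tokens:
        raise ValueError("empty scene description")
    count, pos = int(tokens[0]), 1

    def take3() -> Vec3:
        nonlocal pos
        if pos + 3 > len(tokens):
            raise ValueError("truncated scene description")
        x, y, z = (float(t) for t in tokens[pos:pos + 3])
        pos += 3
        return (x, y, z)

    quads: List[Quad] = []
    for _ in range(count):
        color = take3()
        vertices = [take3() for _ in range(4)]
        quads.append((color, vertices))
    return quads


def quad_to_triangles(v: List[Vec3]) -> List[Tuple[Vec3, Vec3, Vec3]]:
    """Split a counter-clockwise quad into triangles (0, 1, 2) and (0, 2, 3)."""
    return [(v[0], v[1], v[2]), (v[0], v[2], v[3])]
```

Both triangles share the diagonal from vertex 0 to vertex 2, so they keep the quad's counter-clockwise winding and hence its facing direction.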